Run Azure Functions in Kubernetes with KEDA

It's possible to package an Azure Functions app inside a Docker container, which gives you the flexibility to run it on premises, in a cloud other than Azure, or anywhere else you can run Kubernetes. For instructions on how to get your Azure Function app running in a container, check out my article here.

Introducing KEDA

While it's great that this opens the door to running Azure Functions anywhere, until recently there was one notable drawback to containerized Azure Function Apps: the powerful auto-scaling features of the Azure Functions consumption plan were not available. It was up to you to scale out to the appropriate number of containers.

However, the KEDA project (Kubernetes-based Event Driven Autoscaling component) is designed to solve this problem. With KEDA installed on Kubernetes, you can benefit from auto-scaling, so that additional pods will be created as needed when your Function App is under heavy load, and it can scale right down to zero if your app is idle.

It's still in its early days, and only supports a limited number of triggers, but it's already a great option when you need or want to host your Function Apps on Kubernetes.

Demo scenario

In this post, we're going to take an existing containerized Azure Function App (a very simple one I created as part of my Create Serverless Functions Pluralsight course), and install it onto AKS with KEDA configured.

For this demo I'll be using the Azure CLI from PowerShell, along with the Azure Functions Core Tools. I've also got Docker Desktop for Windows installed, which includes kubectl.

Step 1 - Create an AKS cluster

First, let's create a new AKS cluster. Of course you don't have to use AKS; you can use Kubernetes hosted anywhere. (By the way, there seems to be a bug with az aks create at the moment, which means it can fail if the service principal it creates doesn't get created quickly enough. The workaround is to create your own service principal with az ad sp create-for-rbac --skip-assignment and then use the --service-principal and --client-secret arguments in the call to az aks create.)

# create a resource group

$aksrg = "KedaTest"

$location = "westeurope"

az group create -n $aksrg -l $location

# create the AKS cluster

$clusterName = "MarkKedaTest"

az aks create -g $aksrg -n $clusterName --node-count 3 --generate-ssh-keys
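If you do hit the service principal problem mentioned above, the workaround could be scripted along these lines. This is only a sketch: New-AksClusterWithServicePrincipal is a made-up helper name, and it relies on az ad sp create-for-rbac returning JSON with appId and password fields.

```powershell
# Hypothetical helper: create the service principal first, then hand it to az aks create
function New-AksClusterWithServicePrincipal {
    param([string]$ResourceGroup, [string]$ClusterName, [int]$NodeCount = 3)

    # Create the service principal up front and parse the JSON that az prints
    $sp = az ad sp create-for-rbac --skip-assignment | ConvertFrom-Json

    # Pass the credentials explicitly so az aks create doesn't create its own
    az aks create -g $ResourceGroup -n $ClusterName --node-count $NodeCount --generate-ssh-keys `
        --service-principal $sp.appId --client-secret $sp.password
}

# Usage (requires an Azure subscription, so left commented out):
# New-AksClusterWithServicePrincipal -ResourceGroup $aksrg -ClusterName $clusterName
```

Use this in place of the plain az aks create call, not in addition to it.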

That will take about 5 minutes to complete, and once it's done, we fetch the credentials allowing kubectl to talk to it:

# Get credentials for kubectl to use

az aks get-credentials -g $aksrg -n $clusterName --overwrite-existing

# Check we're connected

kubectl get nodes

Step 2 - Install KEDA

Now let's install KEDA onto our AKS cluster. This is done using the Azure Functions Core Tools, and actually installs two things: KEDA, which enables scaling everything except HTTP-triggered functions down to zero, and Osiris, which enables HTTP-triggered functions to scale to zero as well.

# install KEDA on this AKS cluster

func kubernetes install --namespace keda

Step 3 - Deploy an Azure Function App

Now let's deploy our Function App. We're going to use this existing containerized Azure Function app, which I created as part of my Create Serverless Functions Pluralsight course. The code is available here on GitHub, and if you want to containerize your own Azure Function app, it's quite a simple process, which I walk through here.

The command we're going to use to deploy the app is func kubernetes deploy and there are a few different options for how to use this. You can see some of the options in this article here, but I'm going to take a slightly different approach.

Step 3a - Prepare the secrets

By default, the func kubernetes deploy command is going to look for a local.settings.json file and use that to generate a Kubernetes secret containing the environment variables for your container. That might not be exactly what you want, so you are free to point it at your own Kubernetes secret instead.
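As a rough sketch of that second option, you could create the secret yourself with kubectl create secret generic and then tell func kubernetes deploy to use it. New-FunctionSecret and the secret name keda-demo-settings are made up for illustration, and I'm assuming your version of the Core Tools supports the --secret-name option.

```powershell
# Hypothetical helper: create a Kubernetes secret with one key per app setting
function New-FunctionSecret {
    param([string]$Name, [hashtable]$Settings)

    # Build one --from-literal=key=value argument per setting
    $literals = @($Settings.GetEnumerator() | ForEach-Object { "--from-literal=$($_.Key)=$($_.Value)" })

    kubectl create secret generic $Name @literals
}

# Usage (requires a cluster, so left commented out):
# New-FunctionSecret -Name "keda-demo-settings" -Settings @{
#     AzureWebJobsStorage      = "<your storage connection string>"
#     FUNCTIONS_WORKER_RUNTIME = "dotnet"
# }
# func kubernetes deploy --name "keda-demo" --image-name "markheath/serverlessfuncs:v3" --secret-name "keda-demo-settings" --dry-run
```

Bear in mind that values passed this way appear on the command line, so it's best kept to throwaway demo environments.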

For this demo, I'm actually going to auto-generate a local.settings.json file with the exact settings I want. In particular I need to set the AzureWebJobsStorage connection string to a real Azure Storage Account connection string, as my demo app uses Table Storage to store the state of TODO items.

I also need to set up a WEB_HOST environment variable, as my function app uses Azure Functions proxies to pass through HTTP requests to some static web resources hosted in blob storage. I've got these publicly available at https://serverlessfuncsbed6.blob.core.windows.net/website so you can use that if you want to try this for yourself.

So here's my PowerShell script to generate a temporary local.settings.json file:

$connStr = az storage account show-connection-string -g "SharedAssets" -n "mystorageaccount" -o tsv

$staticFiles = "https://serverlessfuncsbed6.blob.core.windows.net/website"

@{
    "IsEncrypted" = $false;
    "Values" = @{
        "AzureWebJobsStorage" = $connStr;
        "FUNCTIONS_WORKER_RUNTIME" = "dotnet";
        "WEB_HOST" = $staticFiles
    };
    "Host" = @{
        "CORS" = "*"
    }
} | ConvertTo-Json | Out-File .\local.settings.json -Encoding utf8
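One thing to watch for: if the az command fails (for example because you're not logged in), $connStr ends up empty and the file is still written. A small sanity check before deploying can catch that. Here's a minimal sketch; Test-LocalSettings is a hypothetical helper, not part of any tool.

```powershell
# Hypothetical helper: check that the generated settings file has the values this demo needs
function Test-LocalSettings {
    param([string]$Path = ".\local.settings.json")

    # Parse the file; this fails if the JSON is malformed
    $settings = Get-Content -Path $Path -Raw | ConvertFrom-Json

    # The three values this demo app relies on
    $required = "AzureWebJobsStorage", "FUNCTIONS_WORKER_RUNTIME", "WEB_HOST"
    $missing = @($required | Where-Object { [string]::IsNullOrWhiteSpace($settings.Values.$_) })

    if ($missing.Count -gt 0) {
        throw "Missing or empty settings: $($missing -join ', ')"
    }
    $true
}

# Usage, once local.settings.json has been generated:
# Test-LocalSettings
```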

Step 3b - Generate the Kubernetes YAML file

Now I'm going to use func kubernetes deploy to generate a Kubernetes YAML file for our Function App. I'll specify the Docker image we want to use, and the --dry-run flag means that it prints the generated YAML instead of applying it to the cluster, so we can redirect it to a file.

$funcDeployment = "keda-demo"

func kubernetes deploy --name $funcDeployment --image-name "markheath/serverlessfuncs:v3" --dry-run > deploy.yml

When this is complete, our deploy.yml file will include all the Kubernetes object definitions we need to deploy our Function App to Kubernetes. This includes Base64-encoded versions of the secrets in our local.settings.json file, so make sure you don't check either file into source control.
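It's worth stressing that Base64 is an encoding, not encryption. The following sketch uses a made-up connection string (not a real secret) to show that anyone holding deploy.yml can recover the original values with a single line of PowerShell.

```powershell
# A made-up connection string standing in for a real secret value
$secret = "DefaultEndpointsProtocol=https;AccountName=example;AccountKey=not-a-real-key"

# Encode it the way values appear in a Kubernetes Secret manifest
$encoded = [Convert]::ToBase64String([Text.Encoding]::UTF8.GetBytes($secret))

# Reversing it needs no key or password
$decoded = [Text.Encoding]::UTF8.GetString([Convert]::FromBase64String($encoded))

# The round trip gives back exactly what we started with
$decoded -eq $secret
```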

Step 3c - Deploy the Function App

Now all we need to do is use kubectl apply to create the necessary resources on our Kubernetes cluster:

kubectl apply -f .\deploy.yml

We should see the following resources get created...

secret/keda-demo created

service/keda-demo-http created

deployment.apps/keda-demo-http created

deployment.apps/keda-demo created

scaledobject.keda.k8s.io/keda-demo created

Step 4 - Testing it out

To test this we will need the public IP address of our service:

kubectl get service --watch

Eventually the external IP address will appear:

NAME             TYPE           CLUSTER-IP    EXTERNAL-IP      PORT(S)        AGE
keda-demo-http   LoadBalancer   10.0.160.79   <pending>        80:32756/TCP   13s
kubernetes       ClusterIP      10.0.0.1      <none>           443/TCP        20m
keda-demo-http   LoadBalancer   10.0.160.79   131.91.133.243   80:32756/TCP   96s
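If you'd rather not watch the output by hand, one option is to ask kubectl for JSON and pick out the address in a script. This is a rough sketch rather than anything the Core Tools provide: Get-ServiceExternalIp and Wait-ServiceExternalIp are made-up helper names, and it assumes the load balancer reports an ip field (some providers report a hostname instead).

```powershell
# Hypothetical helper: extract the external IP from the JSON for one service.
# Returns $null while the load balancer is still pending.
function Get-ServiceExternalIp {
    param([string]$ServiceJson)
    $svc = $ServiceJson | ConvertFrom-Json
    $ingress = $svc.status.loadBalancer.ingress
    if ($ingress) { $ingress[0].ip } else { $null }
}

# Hypothetical helper: poll kubectl until the address has been assigned
function Wait-ServiceExternalIp {
    param([string]$ServiceName, [int]$DelaySeconds = 5)
    while ($true) {
        $json = kubectl get service $ServiceName -o json | Out-String
        $ip = Get-ServiceExternalIp -ServiceJson $json
        if ($ip) { return $ip }
        Start-Sleep -Seconds $DelaySeconds
    }
}

# Usage (requires a cluster, so left commented out):
# $ip = Wait-ServiceExternalIp -ServiceName "keda-demo-http"
# Start-Process "http://$ip"
```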

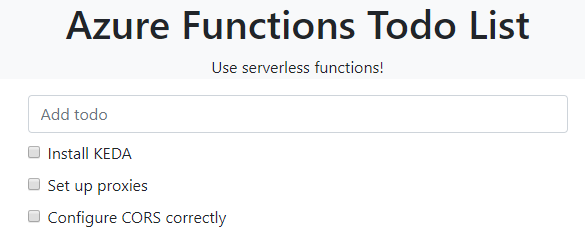

And if we visit that IP address in a browser, we'll see the basic TODO application.

It's not a particularly exciting app, but it does have a timer-triggered function that deletes completed TODO items every five minutes, and if we wait a while we can see that it works.

Step 4b - Testing scaling

When we deploy our app with KEDA, we actually end up with two deployments - one specifically for the HTTP triggered functions, and the other one to handle all other functions. It would be nice to see these scaling up when the function app is busy, and down to zero when it is idle.

PS C:\Code\azure\keda> kubectl get deployments
NAME             READY   UP-TO-DATE   AVAILABLE   AGE
keda-demo        1/1     1            1           109m
keda-demo-http   1/1     1            1           109m

Unfortunately, this particular demo Function App is not ideal for demoing scaling. The keda-demo pod is simply running a timer-triggered function every five minutes, so it will never need to scale up, and won't scale down to zero.

The other pod (keda-demo-http) ought to scale depending on the HTTP traffic, but I've not been able to get it working yet. It might be that there are some issues with HTTP triggered scaling at the moment as I encountered a few bug reports, and the underlying scaling technology is still in preview.

To properly demo KEDA scaling it would be better to have created a Function App based on queue triggered functions. There's a great short demo of KEDA auto-scaling with queues from Jeff Hollan available here (the demo starts 7 minutes in, and the autoscaling happens about 15 minutes in).
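To give a feel for what queue-based scaling means in practice, here's a deliberately simplified model of the decision. It is not KEDA's actual code: the function name, the default target of 5 messages per pod and the cap of 10 pods are all assumptions for illustration, and the real behaviour also depends on the Kubernetes Horizontal Pod Autoscaler, polling intervals and cooldown periods.

```powershell
# Simplified model only: how many pods a queue backlog might call for
function Get-DesiredReplicas {
    param(
        [int]$QueueLength,           # messages currently waiting
        [int]$TargetPerReplica = 5,  # assumed target number of messages per pod
        [int]$MaxReplicas = 10       # assumed upper bound on pods
    )

    # Nothing to process: scale down to zero
    if ($QueueLength -le 0) { return 0 }

    # Enough pods that each one handles at most the target number of messages
    $needed = [int][math]::Ceiling([double]$QueueLength / $TargetPerReplica)

    # Never exceed the configured maximum
    [math]::Min($needed, $MaxReplicas)
}

# Usage:
# Get-DesiredReplicas -QueueLength 12
```

With these assumed defaults, an empty queue gives zero pods, a backlog of 12 messages gives three, and a very large backlog is capped at ten.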

Summary

In this post we walked through the basic steps to install KEDA and run a containerized Function App on Azure Kubernetes Service. Although the particular demo app I installed doesn't showcase the benefits of KEDA, it's great that this auto-scaling functionality is now available for anyone hosting their Azure Function Apps in Kubernetes, and I'm looking forward to seeing how it evolves.

A place for me to share what I'm learning: Azure, .NET, Docker, audio, microservices, and more...


Comments

So the whole point of using KEDA or Kubernetes as such is to run Durable Functions on premises for those who can't use the public cloud for legal reasons. But how do we create a storage account with tables and queues on premises?

Michael Ringholm Sundgaard

As I see it this is used behind the scenes by Durable Functions, is there a way around this, or can't Durable Functions be used on premises without an Azure Storage Account?

Durable Functions is being extended with support for alternative backing stores, e.g. Redis (https://github.com/Azure/du... to better support this scenario

Mark Heath

Hello, I would like to know how to serve the functions over HTTPS in AKS. Locally I use the command func host start --useHttps.

Bruno

Thanks, great article. I get a CORS issue when trying to access the API from my external site. I have set CORS to * as you have, but when I look at the deploy.yml this does not seem to be included anywhere.

LennyD