Rapid API Development with Azure Functions

The Todo-Backend project showcases a variety of languages and frameworks, each used to implement a simple backend for a todo list application. There are many community-contributed implementations, which are a great way to see a simple example of what’s involved in setting up a basic web API with each technology.

I noticed that there was no Azure Functions implementation in the list, and since Azure Functions is an ideal platform to rapidly prototype and host a simple web API, I thought I’d give it a try.

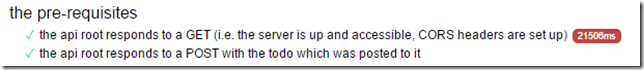

One really nice feature of the Todo-Backend project is its online test runner: you simply point it at your own web API and keep implementing endpoints until all the tests pass.

Spoiler alert: you’ll end up creating a GET all and GET by id, a POST to create a new todo item, a PATCH to modify one, and a DELETE all and DELETE by id.

With Azure Functions, it’s possible to create one single function that responds to multiple HTTP verbs, but it’s a better separation of concerns to have a separate function per use case, much like you’d have a separate method in an ASP.NET MVC controller for each of these actions.

To keep things interesting, I decided I’d use a mix of languages, and implement two functions in C#, two in JavaScript and two in F#.
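To make the split concrete, here is a small JavaScript sketch of how the six method/route pairs divide the /api/todos endpoint between functions. The Functions host does this matching for us from each function.json, so this is only an illustration: the function names and the `matchRoute`/`resolve` helpers are hypothetical, not the host’s actual routing code.

```javascript
// Illustrative summary of the six functions in this post. The function names
// are hypothetical; in the real app each route lives in its own function.json.
const routes = [
  { method: "GET", route: "todos/", fn: "GetAllTodos", lang: "JavaScript" },
  { method: "POST", route: "todos/", fn: "PostTodo", lang: "F#" },
  { method: "DELETE", route: "todos/", fn: "DeleteAllTodos", lang: "C#" },
  { method: "GET", route: "todos/{id}", fn: "GetTodoById", lang: "JavaScript" },
  { method: "PATCH", route: "todos/{id}", fn: "PatchTodo", lang: "C#" },
  { method: "DELETE", route: "todos/{id}", fn: "DeleteTodoById", lang: "F#" },
];

// Match a path (relative to the /api prefix) against a route template such as
// "todos/{id}", returning the captured parameters or null if it doesn't match.
function matchRoute(template, path) {
  const t = template.replace(/\/+$/, "").split("/");
  const p = path.replace(/\/+$/, "").split("/");
  if (t.length !== p.length) return null;
  const params = {};
  for (let i = 0; i < t.length; i++) {
    const placeholder = /^\{(\w+)\}$/.exec(t[i]);
    if (placeholder) {
      if (p[i] === "") return null;
      params[placeholder[1]] = decodeURIComponent(p[i]);
    } else if (t[i] !== p[i]) {
      return null;
    }
  }
  return params;
}

// Find which function would handle a given method and path.
function resolve(method, path) {
  for (const r of routes) {
    if (r.method !== method) continue;
    const params = matchRoute(r.route, path);
    if (params) return { fn: r.fn, params };
  }
  return null;
}
```

For example, `resolve("PATCH", "todos/42")` returns `{ fn: "PatchTodo", params: { id: "42" } }`, while a PUT to the same path matches nothing.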

Creating a Function App

There are several ways to create a function app. I created a new resource group in the portal and added a function app using the consumption plan. I then used the Azure Functions CLI tooling locally to create a new function app with func init. This initialises the current folder with the necessary files, including host.json and appSettings.json (for local settings), and gives you a Git repository complete with a .gitignore file configured for Azure Function Apps.

Function 1: GET All Todo Items (JavaScript)

The first function I needed to create was a get all todos method. I used func new to create a new function and chose JavaScript as the language and selected a HTTP triggered function.

There are a few things we’ll need to do in all the function.json files for our functions. The first is to set the authorization level (authLevel) to anonymous, since this todo API is just a simple demo and has no concept of users.

Second, we need to specify the allowed methods for this function. In this case it’s simply GET.

Third, we need to specify the route. Normally an HTTP triggered function derives its route from the function name, but we’re going to have several functions all sharing the /api/todos endpoint. So for this function, we just need to specify a route of todos/ (the api prefix is added automatically).

Here’s the part of the function.json file describing the HTTP input binding for our get all todos function:

```json
"bindings": [
    {
        "authLevel": "anonymous",
        "type": "httpTrigger",
        "direction": "in",
        "name": "req",
        "methods": [
            "GET"
        ],
        "route": "todos/"
    },
```

We also need to pick somewhere to store our todos. I did blog a while ago about how you could cheat and use memory caches with Azure Functions for very rough and ready prototyping, but I wanted a proper backing store, and Azure Table storage is an ideal choice because it’s cheap and extensible, and we already have an Azure Storage Account with every Function App anyway.

So my get all todos method also has an input binding which will take up to 50 rows from the todos table and pass them in the todostable function parameter. Here’s the part of function.json for the table storage input binding:

```json
{
    "type": "table",
    "name": "todostable",
    "tableName": "todos",
    "take": 50,
    "connection": "AzureWebJobsStorage",
    "direction": "in"
}
```

Now on to the function itself. JavaScript really is a great language choice here, as the input data is already almost in the ideal form for the HTTP response. I decided to hide the PartitionKey and RowKey fields, but apart from that we pass the rows straight through:

```javascript
module.exports = function (context, req, todostable) {
    context.log("Retrieved todos:", todostable);
    // Hide the Table Storage keys from API clients
    todostable.forEach(t => { delete t.PartitionKey; delete t.RowKey; });
    // Declare res locally rather than creating an implicit global
    const res = {
        status: 200,
        body: todostable
    };
    context.done(null, res);
};
```
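Deleting the keys in place is fine here, but if you’d rather not mutate the binding’s objects (for example, to unit test the mapping on its own), object rest destructuring does the same job. This is a minimal sketch; `toApiTodo` and `getAllResponse` are hypothetical helper names.

```javascript
// Hypothetical helper: copy a table entity without its PartitionKey and RowKey,
// leaving the original object untouched.
function toApiTodo(entity) {
  const { PartitionKey, RowKey, ...todo } = entity;
  return todo;
}

// Build the same response shape as the GET all function above.
function getAllResponse(rows) {
  return { status: 200, body: (rows || []).map(toApiTodo) };
}
```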

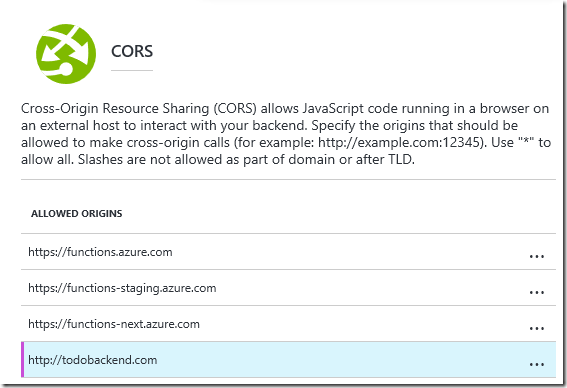

There’s one important thing to do before this will work: we must set up CORS if we want to use the Todo-Backend test client and spec runner against our function app. We can do this in the portal, and we simply need to add http://todobackend.com to the list:

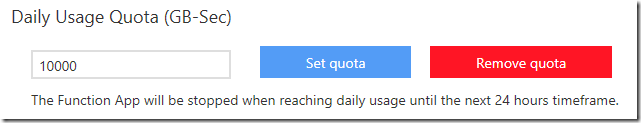

I also set up a daily usage quota for my function app. This means that if a malicious user attempts to put me out of pocket by hammering my function app with requests, it will simply stop running until the next day, which is absolutely fine for this function app.

So that gets us past the first test for the Todo-Backend API. To pass the next test we need to support POST.

Function 2: POST a New Todo Item (F#)

For my POST function, I decided to use F#. The function bindings are similar to the GET function, except now we only allow the POST method, and we are using an output binding to table storage instead of an input binding.

```json
"bindings": [
    {
        "authLevel": "anonymous",
        "name": "req",
        "type": "httpTrigger",
        "direction": "in",
        "methods": [
            "POST"
        ],
        "route": "todos/"
    },
    {
        "name": "res",
        "type": "http",
        "direction": "out"
    },
    {
        "type": "table",
        "name": "todosTable",
        "tableName": "todos",
        "connection": "AzureWebJobsStorage",
        "direction": "out"
    }
],
```

I used func new to give me a templated HTTP triggered F# function, which gives us a good starting point for a function that uses Newtonsoft.Json for serialization. Our function needs to deserialize the body of the request, give the new todo item an Id (I used a Guid), save it to table storage, and then serialize the todo item back to JSON for the response.

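I declared the following Todo type, which serves the dual purpose of being the JSON request/response model and the Table Storage entity (which needs a RowKey and PartitionKey). In the world of F#, an optional integer would normally be expressed as an int option, but that doesn’t play nicely with Newtonsoft.Json serialization, so I switched to a regular Nullable<int>.

```fsharp
#r "System.Net.Http"
#r "Newtonsoft.Json"

open System
open System.Net
open System.Net.Http
open Microsoft.Azure.WebJobs
open Newtonsoft.Json

// Used both as the JSON payload and as the Table Storage entity;
// the keys are hidden from the JSON output
type Todo = {
    [<JsonIgnore>]
    PartitionKey: string
    [<JsonIgnore>]
    RowKey: string
    id: string
    title: string
    url: string
    order: System.Nullable<int>
    completed: bool
}
```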

The templated function uses an async workflow which is a syntax I’m still not completely at home with, but with the exception of needing a special helper to await a regular Task (instead of a Task<T>), it wasn’t too hard.

The rows in our table use a hardcoded PartitionKey of TODO. It could use a user id if it was storing todos for many people. I also decided for simplicity to create the url for each todo item at creation time and store it in table storage, but arguably that should be done at retrieval time.
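For comparison, here is a minimal JavaScript sketch of the retrieval-time alternative: given the collection URL (assumed here to look like https://<app>.azurewebsites.net/api/todos), each todo’s url is derived from its id when it is read. `withUrl` is a hypothetical helper name.

```javascript
// Hypothetical: compute a todo's url from the collection URL when it is read,
// instead of storing it in the table at creation time.
function withUrl(collectionUrl, todo) {
  const base = collectionUrl.replace(/\/+$/, "");
  return { ...todo, url: `${base}/${encodeURIComponent(todo.id)}` };
}
```

The upside of this design is that stored rows don’t go stale if the app’s host name or route prefix ever changes; the cost is a little extra work on every read.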

Here’s the Run method for our POST todo item function:

```fsharp
let Run(req: HttpRequestMessage, log: TraceWriter, todosTable: IAsyncCollector<Todo>) =
    async {
        let! data = req.Content.ReadAsStringAsync() |> Async.AwaitTask
        log.Info(sprintf "Got a task: %s" data)
        let todo = JsonConvert.DeserializeObject<Todo>(data)
        // New id in Guid "N" format (32 hex digits, no hyphens)
        let newId = Guid.NewGuid().ToString("N")
        // Request URL without query string or trailing slash, plus the new id
        let newUrl = req.RequestUri.GetLeftPart(UriPartial.Path).TrimEnd('/') + "/" + newId
        let tableEntity = { todo with PartitionKey = "TODO"; RowKey = newId; id = newId; url = newUrl }
        // Helper to await a non-generic Task
        let awaitTask = Async.AwaitIAsyncResult >> Async.Ignore
        do! todosTable.AddAsync(tableEntity) |> awaitTask
        log.Info(sprintf "Table entity %A." tableEntity)
        let respJson = JsonConvert.SerializeObject(tableEntity)
        let resp = new HttpResponseMessage(HttpStatusCode.OK)
        resp.Content <- new StringContent(respJson)
        return resp
    } |> Async.RunSynchronously
```
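For readers more at home in JavaScript, here is a rough sketch of the same POST steps: parse the body, assign an id, build the url, save, and respond without the keys. It is not a drop-in Azure Function; `createTodo` is hypothetical, `requestUrl` stands in for req.RequestUri, and `saveEntity` stands in for the table output binding. The id is a random 128-bit value in the same 32-hex-digit shape as Guid.ToString("N"), though not a formal GUID, and `completed` defaults to false to mirror the F# record’s bool default.

```javascript
const crypto = require("crypto");

// Hypothetical JavaScript counterpart of the F# Run method above.
async function createTodo(requestUrl, bodyText, saveEntity) {
  const todo = JSON.parse(bodyText);
  // 16 random bytes as 32 hex digits, like Guid.ToString("N")
  const newId = crypto.randomBytes(16).toString("hex");
  // Like GetLeftPart(UriPartial.Path).TrimEnd('/'): drop the query string and trailing slashes
  const u = new URL(requestUrl);
  const newUrl = `${u.origin}${u.pathname}`.replace(/\/+$/, "") + "/" + newId;
  const entity = { completed: false, ...todo, PartitionKey: "TODO", RowKey: newId, id: newId, url: newUrl };
  await saveEntity(entity);
  // Hide the table keys in the response, as [<JsonIgnore>] does in F#
  const { PartitionKey, RowKey, ...body } = entity;
  return { status: 200, body };
}
```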

So that means we’ve passed two out of the 16 tests. The next test is to DELETE all todos.

Function 3: DELETE all Todo Items (C#)

For our third function, we need to respond to a DELETE method by deleting all Todo items! This isn’t something that’s straightforward to do with the Azure Functions table storage bindings at the moment, but this article from Anthony Chu pointed me in the right direction.

We create a Table Storage input binding in the same way we did for our GET function, and in the Run method signature, we use a CloudTable object to bind to the table.

Unfortunately, there is no method on CloudTable that deletes all rows from the table. We could delete the whole table and recreate it, and that might be the quickest way, but I opted to perform a simple query to get all Todos, and loop through and delete them individually. Obviously this assumes there are only a small number of items in the table, and I’m pretty sure that the TableQuery has a max number of rows it returns anyway.

Here’s the code for my delete function:

```csharp
#r "Microsoft.WindowsAzure.Storage"

using System.Net;
using Microsoft.WindowsAzure.Storage.Table;

// Minimal entity: keys come from TableEntity, title is only used for logging
class Todo : TableEntity
{
    public string title { get; set; }
}

public static HttpResponseMessage Run(HttpRequestMessage req, CloudTable todosTable, TraceWriter log)
{
    log.Info("Request delete all todos");
    // Materialise the query results before we start deleting
    var allTodos = todosTable.ExecuteQuery<Todo>(new TableQuery<Todo>())
        .ToList();
    foreach (var todo in allTodos)
    {
        log.Info($"Deleting {todo.RowKey} {todo.title}");
        var operation = TableOperation.Delete(todo);
        todosTable.Execute(operation);
    }
    return req.CreateResponse(HttpStatusCode.OK);
}
```
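At the service level, Table storage returns query results a page at a time (at most 1,000 entities per response) along with a continuation token. The sketch below shows the same collect-then-delete idea against a hypothetical in-memory table that pages its results that way; `makeFakeTable`, `querySegment` and `deleteAll` are illustrative names, not the storage SDK’s API.

```javascript
// Hypothetical in-memory table that returns query results one page at a time,
// with a continuation token (null when there are no more pages).
function makeFakeTable(entities, pageSize) {
  const rows = new Map(entities.map((e) => [e.RowKey, e]));
  return {
    querySegment(token) {
      const all = [...rows.values()];
      const start = token === null ? 0 : token;
      const page = all.slice(start, start + pageSize);
      const next = start + pageSize < all.length ? start + pageSize : null;
      return { entities: page, continuationToken: next };
    },
    delete(rowKey) {
      rows.delete(rowKey);
    },
    size() {
      return rows.size;
    },
  };
}

// Collect every key first (following continuation tokens), then delete, so
// that deleting rows can't shift the pages we're still reading.
function deleteAll(table) {
  const keys = [];
  let token = null;
  do {
    const { entities, continuationToken } = table.querySegment(token);
    for (const e of entities) keys.push(e.RowKey);
    token = continuationToken;
  } while (token !== null);
  for (const key of keys) table.delete(key);
  return keys.length;
}
```

Collecting the keys before deleting mirrors the ToList() call in the C# version.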

Now at this stage we pass a few more of the 16 tests, but we fail because when the url we set up for each todo is called, there’s nothing listening. We need a get by id function next.

Function 4: GET Todo Item by Id (JavaScript)

We’re back to JavaScript for this function, and there’s a slight difference in our routes now, as we expect an id, so the route for this function is todos/{id}. We’re also going to use a table input binding again, but this time, instead of getting all rows, we’re going to say that the partitionKey must be TODO and the rowKey must be the id we were passed in the URL. So the function binding for table storage looks like this:

```json
{
    "type": "table",
    "name": "todo",
    "tableName": "todos",
    "partitionKey": "TODO",
    "rowKey": "{id}",
    "take": 50,
    "connection": "AzureWebJobsStorage",
    "direction": "in"
}
```

The implementation is pretty simple. If the row was found, we return a 200 after hiding the row and partition keys; otherwise we return a 404 Not Found.

```javascript
module.exports = function (context, req, todo) {
    context.log("Retrieving todo", req.params.id, todo);
    let res;
    if (todo) {
        // Hide the Table Storage keys from API clients
        delete todo.RowKey;
        delete todo.PartitionKey;
        res = {
            status: 200,
            body: todo
        };
    }
    else {
        // Nothing to return in the body when the row doesn't exist
        res = {
            status: 404
        };
    }
    context.done(null, res);
};
```

Next up we need to support modifying an existing todo with the PATCH method.

Function 5: PATCH Todo Item (C#)

The PATCH function borrows techniques we saw with the POST and GET by id functions. Like GET by id, we’ll use a route of todos/{id} and a Table Storage input binding set up to find that specific row. We’ll bind to that as a custom strongly typed Todo object.

And like the POST method, our HTTP request and response both contain JSON and we need to write to table storage.

So I read the input JSON as a JObject and support modifying only the title, order and completed properties. Once we’ve patched our Todo object, we can update it in table storage with a TableOperation.Replace operation.

I then serialize an anonymous object to the output which is an easy way of hiding the row and partition keys.

Here’s the code for my patch todo item function:

```csharp
#r "Microsoft.WindowsAzure.Storage"
#r "Newtonsoft.Json"

using Microsoft.WindowsAzure.Storage.Table;
using System.Net;
using Newtonsoft.Json;
using Newtonsoft.Json.Linq;

public class Todo : TableEntity
{
    public string id { get; set; }
    public string title { get; set; }
    public string url { get; set; }
    public int? order { get; set; }
    public bool completed { get; set; }
}

public static async Task<HttpResponseMessage> Run(HttpRequestMessage req, string id, Todo todo, CloudTable todoTable, TraceWriter log)
{
    log.Info($"Patching {id}");
    // The table input binding gives us null if there's no row with this id
    if (todo == null) return new HttpResponseMessage(HttpStatusCode.NotFound);

    var patch = await req.Content.ReadAsAsync<JObject>();
    log.Info($"Patching with id={patch["id"]}|title={patch["title"]}|url={patch["url"]}|order={patch["order"]}|completed={patch["completed"]}|");

    // Only title, order and completed may be modified
    if (patch["title"] != null)
        todo.title = (string)patch["title"];
    if (patch["order"] != null)
        todo.order = (int?)patch["order"]; // int? so an explicit JSON null clears the order
    if (patch["completed"] != null)
        todo.completed = (bool)patch["completed"];

    //todo.ETag = "*";
    var operation = TableOperation.Replace(todo);
    await todoTable.ExecuteAsync(operation);

    // Serialize an anonymous object to hide the row and partition keys
    var resp = new HttpResponseMessage(HttpStatusCode.OK);
    var json = JsonConvert.SerializeObject(new { todo.id, todo.title, todo.order, todo.completed, todo.url });
    resp.Content = new StringContent(json);
    return resp;
}
```
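The patch rule itself is easy to isolate. Here is a minimal JavaScript sketch of the same "only title, order and completed" policy applied to a plain object; `applyPatch` is a hypothetical helper, and in this sketch a field that is present with a null value is applied (so `{ "order": null }` clears the order).

```javascript
// Hypothetical JavaScript version of the PATCH rules: only these fields may change.
const PATCHABLE_FIELDS = ["title", "order", "completed"];

// Return a patched copy of `todo`; id, url and any other fields are left alone.
function applyPatch(todo, patch) {
  const updated = { ...todo };
  for (const field of PATCHABLE_FIELDS) {
    // A field counts as present even when its value is null (e.g. clearing order).
    if (Object.prototype.hasOwnProperty.call(patch, field)) {
      updated[field] = patch[field];
    }
  }
  return updated;
}
```

Whitelisting fields this way means a client can’t overwrite the id or url by including them in the PATCH body.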

We’re almost there! Just need to support deleting an individual todo item.

Function 6: DELETE Todo Item by Id (F#)

So finally we need to support deleting todo items by id, and we’ll use F# for this function. Once again we’ll need a route of todos/{id}, and we bind the table as a CloudTable, which allows us to use the TableOperation.Delete operation. We do need to set the ETag to * for this to work, which makes the delete unconditional regardless of the entity’s current version. Table storage reports a 404 if the entity doesn’t exist, but because the awaitTask helper (built on Async.AwaitIAsyncResult) only waits for the task to finish without rethrowing its exception, this method always returns a 204 No Content.

Here’s the Run method:

```fsharp
#r "Microsoft.WindowsAzure.Storage"

open System.Net
open System.Net.Http
open Microsoft.WindowsAzure.Storage.Table

let Run(req: HttpRequestMessage, id: string, todosTable: CloudTable, log: TraceWriter) =
    async {
        log.Info(sprintf "Request delete of todo %s." id)
        // Only the keys are needed to identify the row to delete
        let todo = TableEntity("TODO", id)
        // "*" means delete whatever version of the entity is stored
        todo.ETag <- "*"
        let operation = TableOperation.Delete(todo)
        let awaitTask = Async.AwaitIAsyncResult >> Async.Ignore
        do! todosTable.ExecuteAsync(operation) |> awaitTask
        // returns success even if TODO doesn't exist
        return req.CreateResponse(HttpStatusCode.NoContent)
    } |> Async.RunSynchronously
```
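If you’d rather make the always-204 behaviour explicit in code, one approach is to catch only a not-found error from the delete call and let any other failure surface. This is a JavaScript sketch under stated assumptions: `deleteTodoById` is hypothetical, and `removeEntity` stands in for a storage delete call that rejects with an error whose `statusCode` is 404 when the row is missing.

```javascript
// Hypothetical JavaScript take on DELETE /api/todos/{id}. `removeEntity` stands in
// for a storage delete call that may reject with an error whose statusCode is 404
// when the row doesn't exist.
async function deleteTodoById(removeEntity, id) {
  try {
    await removeEntity("TODO", id);
  } catch (err) {
    // "Not found" counts as success so DELETE stays idempotent;
    // any other failure is a real error and is rethrown.
    if (err.statusCode !== 404) throw err;
  }
  return { status: 204 };
}
```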

Testing it Out

It’s quite easy to test your function app either locally or in the cloud. If you want to test locally, you’ll need to provide a storage connection string for the local functions host to use. You can put this into your appSettings.json file by running:

```
func settings add AzureWebJobsStorage "DefaultEndpointsProtocol=https;AccountName=<YOURSTORAGEAPP>;AccountKey=<YOURACCOUNTKEY>"
```

Then you can just use func host start to start the runtime, and try calling your API with Postman.

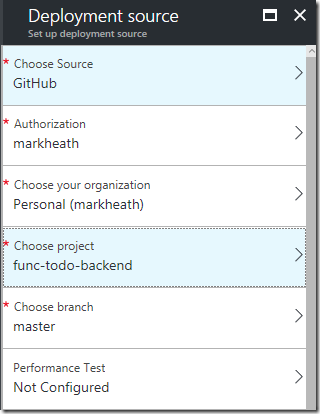

But it’s also really easy to test in the cloud. If you’ve created a Function App, you can set up Git continuous deployment, which is, in my opinion, the easiest way to deploy Function Apps. I’ve got all the code for this example hosted on GitHub, so I simply had to point it at my public GitHub repo.

Once you’ve done this, simply pushing to your repository allows you to go live (you can choose another branch if you don’t want every change to master to go live).

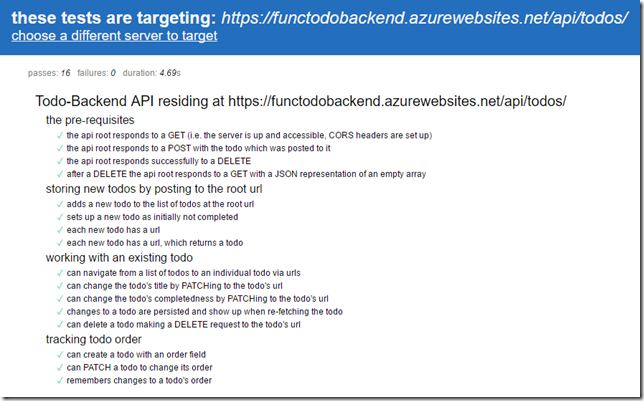

This means I can run the Todo-Backend spec tests directly against my Azure Functions app simply by visiting this link. If all is well, we’ll see all 16 tests passing.

One thing this does highlight is how poor Azure Functions can be at a cold start. Often after leaving my app dormant for a while it will take 20-30 seconds before the first API call responds.

But once it’s warmed up, the test suite will complete in a few seconds. Hopefully the Azure Functions team will continue to work on improving cold start times, but if your use case requires it, one trick is to use a scheduled function to keep your app warm.

Summary

In this example we’ve seen how easy it is to create an Azure Function App that implements a simple API. We can use a separate function for each HTTP method and use custom routes to present the API endpoints we want. We can mix and match different programming languages, and Table Storage offers a cheap and easy way to persist data.

This whole API took only a couple of hours to implement, and the great thing is that it will cost very little to run. In fact, it’s very likely that all the usage will fall within the generous monthly free grant of 1 million executions and 400,000 GB-s that you get with Azure Functions.
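To get a feel for the GB-s figure, here’s some rough arithmetic in JavaScript. It assumes the consumption-plan billing rules as I understand them (memory rounded up to the nearest 128 MB, duration rounded up to the nearest millisecond, and a minimum of 128 MB and 100 ms per execution); `gbSeconds` and `withinFreeGrant` are hypothetical helpers, so check the current pricing page before relying on the numbers.

```javascript
// Rough consumption-plan arithmetic under the assumed billing rules above.
function gbSeconds(memoryMb, durationMs) {
  const billedMb = Math.max(128, Math.ceil(memoryMb / 128) * 128);
  const billedMs = Math.max(100, Math.ceil(durationMs));
  return (billedMb / 1024) * (billedMs / 1000);
}

// Monthly free grant figures quoted in the text.
const FREE_EXECUTIONS = 1000000;
const FREE_GB_S = 400000;

// Would `calls` executions of this size fit inside the free grant?
function withinFreeGrant(calls, memoryMb, durationMs) {
  return calls <= FREE_EXECUTIONS && calls * gbSeconds(memoryMb, durationMs) <= FREE_GB_S;
}
```

For example, a call using 100 MB for 50 ms is billed as 128 MB for 100 ms, i.e. 0.0125 GB-s, so a million such calls use about 12,500 GB-s, far below the 400,000 GB-s grant.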

If you want to see the full code and bindings for all six functions, you can find it here on my GitHub account: https://github.com/markheath/func-todo-backend

A place for me to share what I'm learning: Azure, .NET, Docker, audio, microservices, and more...
